Advanced Tutorial

What to Expect from This Tutorial?

In this tutorial, you will create a table and insert a large dataset (two million rows of the New York taxi data). Then you will run queries on the dataset, including an example of how to create a dictionary and use it to perform a JOIN.

This tutorial assumes you have access to a running ClickHouse service. If not, check out the Quick Start.

1. Create a New Table

The New York City taxi data contains the details of millions of taxi rides, with columns like pickup and drop-off times and locations, cost, tip amount, tolls, payment type and so on. Let's create a table to store this data...

Connect to the SQL console

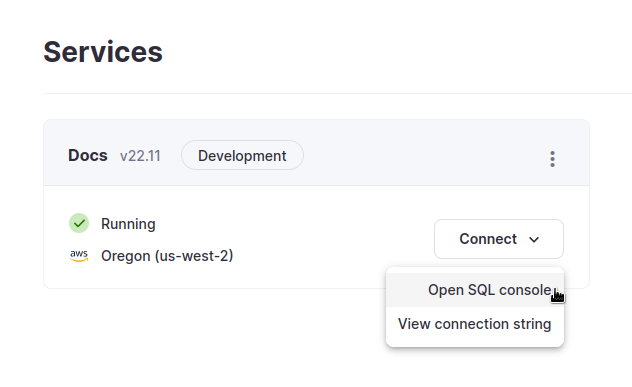

SQL consoleIf you need a SQL client connection, your ClickHouse Cloud service has an associated web based SQL console; expand Connect to SQL console below for details.

Connect to SQL console

From your ClickHouse Cloud services list, choose the service that you will work with and click Connect. From here you can Open SQL console:

If you are using self-managed ClickHouse you can connect to the SQL console at https://hostname:8443/play (check with your ClickHouse administrator for the details).

Create the following

tripstable in thedefaultdatabase:CREATE TABLE trips

(

`trip_id` UInt32,

`vendor_id` Enum8('1' = 1, '2' = 2, '3' = 3, '4' = 4, 'CMT' = 5, 'VTS' = 6, 'DDS' = 7, 'B02512' = 10, 'B02598' = 11, 'B02617' = 12, 'B02682' = 13, 'B02764' = 14, '' = 15),

`pickup_date` Date,

`pickup_datetime` DateTime,

`dropoff_date` Date,

`dropoff_datetime` DateTime,

`store_and_fwd_flag` UInt8,

`rate_code_id` UInt8,

`pickup_longitude` Float64,

`pickup_latitude` Float64,

`dropoff_longitude` Float64,

`dropoff_latitude` Float64,

`passenger_count` UInt8,

`trip_distance` Float64,

`fare_amount` Float32,

`extra` Float32,

`mta_tax` Float32,

`tip_amount` Float32,

`tolls_amount` Float32,

`ehail_fee` Float32,

`improvement_surcharge` Float32,

`total_amount` Float32,

`payment_type` Enum8('UNK' = 0, 'CSH' = 1, 'CRE' = 2, 'NOC' = 3, 'DIS' = 4),

`trip_type` UInt8,

`pickup` FixedString(25),

`dropoff` FixedString(25),

`cab_type` Enum8('yellow' = 1, 'green' = 2, 'uber' = 3),

`pickup_nyct2010_gid` Int8,

`pickup_ctlabel` Float32,

`pickup_borocode` Int8,

`pickup_ct2010` String,

`pickup_boroct2010` String,

`pickup_cdeligibil` String,

`pickup_ntacode` FixedString(4),

`pickup_ntaname` String,

`pickup_puma` UInt16,

`dropoff_nyct2010_gid` UInt8,

`dropoff_ctlabel` Float32,

`dropoff_borocode` UInt8,

`dropoff_ct2010` String,

`dropoff_boroct2010` String,

`dropoff_cdeligibil` String,

`dropoff_ntacode` FixedString(4),

`dropoff_ntaname` String,

`dropoff_puma` UInt16

)

ENGINE = MergeTree

PARTITION BY toYYYYMM(pickup_date)

ORDER BY pickup_datetime;

2. Insert the Dataset

Now that you have a table created, let's add the NYC taxi data. It is in CSV files in S3, and you can load the data from there.

The following command inserts ~2,000,000 rows into your

tripstable from two different files in S3:trips_1.tsv.gzandtrips_2.tsv.gz:INSERT INTO trips

SELECT * FROM s3(

'https://datasets-documentation.s3.eu-west-3.amazonaws.com/nyc-taxi/trips_{1..2}.gz',

'TabSeparatedWithNames', "

`trip_id` UInt32,

`vendor_id` Enum8('1' = 1, '2' = 2, '3' = 3, '4' = 4, 'CMT' = 5, 'VTS' = 6, 'DDS' = 7, 'B02512' = 10, 'B02598' = 11, 'B02617' = 12, 'B02682' = 13, 'B02764' = 14, '' = 15),

`pickup_date` Date,

`pickup_datetime` DateTime,

`dropoff_date` Date,

`dropoff_datetime` DateTime,

`store_and_fwd_flag` UInt8,

`rate_code_id` UInt8,

`pickup_longitude` Float64,

`pickup_latitude` Float64,

`dropoff_longitude` Float64,

`dropoff_latitude` Float64,

`passenger_count` UInt8,

`trip_distance` Float64,

`fare_amount` Float32,

`extra` Float32,

`mta_tax` Float32,

`tip_amount` Float32,

`tolls_amount` Float32,

`ehail_fee` Float32,

`improvement_surcharge` Float32,

`total_amount` Float32,

`payment_type` Enum8('UNK' = 0, 'CSH' = 1, 'CRE' = 2, 'NOC' = 3, 'DIS' = 4),

`trip_type` UInt8,

`pickup` FixedString(25),

`dropoff` FixedString(25),

`cab_type` Enum8('yellow' = 1, 'green' = 2, 'uber' = 3),

`pickup_nyct2010_gid` Int8,

`pickup_ctlabel` Float32,

`pickup_borocode` Int8,

`pickup_ct2010` String,

`pickup_boroct2010` String,

`pickup_cdeligibil` String,

`pickup_ntacode` FixedString(4),

`pickup_ntaname` String,

`pickup_puma` UInt16,

`dropoff_nyct2010_gid` UInt8,

`dropoff_ctlabel` Float32,

`dropoff_borocode` UInt8,

`dropoff_ct2010` String,

`dropoff_boroct2010` String,

`dropoff_cdeligibil` String,

`dropoff_ntacode` FixedString(4),

`dropoff_ntaname` String,

`dropoff_puma` UInt16

") SETTINGS input_format_try_infer_datetimes = 0Wait for the

INSERTto finish - it might take a moment for the 150 MB of data to be downloaded.NoteThe

s3function cleverly knows how to decompress the data, and theTabSeparatedWithNamesformat tells ClickHouse that the data is tab-separated and also to skip the header row of each file.When the insert is finished, verify it worked:

SELECT count() FROM tripsYou should see about 2M rows (1,999,657 rows, to be precise).

NoteNotice how quickly and how few rows ClickHouse had to process to determine the count? You can get back the count in 0.001 seconds with only 6 rows processed.

If you run a query that needs to hit every row, you will notice considerably more rows need to be processed, but the run time is still blazing fast:

SELECT DISTINCT(pickup_ntaname) FROM tripsThis query has to process 2M rows and return 190 values, but notice it does this in about 1 second. The

pickup_ntanamecolumn represents the name of the neighborhood in New York City where the taxi ride originated.

3. Analyze the Data

Let's run some queries to analyze the 2M rows of data...

We will start with some simple calculations, like computing the average tip amount:

SELECT round(avg(tip_amount), 2) FROM tripsThe response is:

┌─round(avg(tip_amount), 2)─┐

│ 1.68 │

└───────────────────────────┘This query computes the average cost based on the number of passengers:

SELECT

passenger_count,

ceil(avg(total_amount),2) AS average_total_amount

FROM trips

GROUP BY passenger_countThe

passenger_countranges from 0 to 9:┌─passenger_count─┬─average_total_amount─┐

│ 0 │ 22.69 │

│ 1 │ 15.97 │

│ 2 │ 17.15 │

│ 3 │ 16.76 │

│ 4 │ 17.33 │

│ 5 │ 16.35 │

│ 6 │ 16.04 │

│ 7 │ 59.8 │

│ 8 │ 36.41 │

│ 9 │ 9.81 │

└─────────────────┴──────────────────────┘Here is a query that calculates the daily number of pickups per neighborhood:

SELECT

pickup_date,

pickup_ntaname,

SUM(1) AS number_of_trips

FROM trips

GROUP BY pickup_date, pickup_ntaname

ORDER BY pickup_date ASCThe result looks like:

┌─pickup_date─┬─pickup_ntaname───────────────────────────────────────────┬─number_of_trips─┐

│ 2015-07-01 │ Brooklyn Heights-Cobble Hill │ 13 │

│ 2015-07-01 │ Old Astoria │ 5 │

│ 2015-07-01 │ Flushing │ 1 │

│ 2015-07-01 │ Yorkville │ 378 │

│ 2015-07-01 │ Gramercy │ 344 │

│ 2015-07-01 │ Fordham South │ 2 │

│ 2015-07-01 │ SoHo-TriBeCa-Civic Center-Little Italy │ 621 │

│ 2015-07-01 │ Park Slope-Gowanus │ 29 │

│ 2015-07-01 │ Bushwick South │ 5 │

This query computes the length of the trip and groups the results by that value:

SELECT

avg(tip_amount) AS avg_tip,

avg(fare_amount) AS avg_fare,

avg(passenger_count) AS avg_passenger,

count() AS count,

truncate(date_diff('second', pickup_datetime, dropoff_datetime)/60) as trip_minutes

FROM trips

WHERE trip_minutes > 0

GROUP BY trip_minutes

ORDER BY trip_minutes DESCThe result looks like:

┌──────────────avg_tip─┬───────────avg_fare─┬──────avg_passenger─┬──count─┬─trip_minutes─┐

│ 1.9600000381469727 │ 8 │ 1 │ 1 │ 27511 │

│ 0 │ 12 │ 2 │ 1 │ 27500 │

│ 0.542166673981895 │ 19.716666666666665 │ 1.9166666666666667 │ 60 │ 1439 │

│ 0.902499997522682 │ 11.270625001192093 │ 1.95625 │ 160 │ 1438 │

│ 0.9715789457909146 │ 13.646616541353383 │ 2.0526315789473686 │ 133 │ 1437 │

│ 0.9682692398245518 │ 14.134615384615385 │ 2.076923076923077 │ 104 │ 1436 │

│ 1.1022105210705808 │ 13.778947368421052 │ 2.042105263157895 │ 95 │ 1435 │

This query shows the number of pickups in each neighborhood, broken down by hour of the day:

SELECT

pickup_ntaname,

toHour(pickup_datetime) as pickup_hour,

SUM(1) AS pickups

FROM trips

WHERE pickup_ntaname != ''

GROUP BY pickup_ntaname, pickup_hour

ORDER BY pickup_ntaname, pickup_hourThe result looks like:

┌─pickup_ntaname───────────────────────────────────────────┬─pickup_hour─┬─pickups─┐

│ Airport │ 0 │ 3509 │

│ Airport │ 1 │ 1184 │

│ Airport │ 2 │ 401 │

│ Airport │ 3 │ 152 │

│ Airport │ 4 │ 213 │

│ Airport │ 5 │ 955 │

│ Airport │ 6 │ 2161 │

│ Airport │ 7 │ 3013 │

│ Airport │ 8 │ 3601 │

│ Airport │ 9 │ 3792 │

│ Airport │ 10 │ 4546 │

│ Airport │ 11 │ 4659 │

│ Airport │ 12 │ 4621 │

│ Airport │ 13 │ 5348 │

│ Airport │ 14 │ 5889 │

│ Airport │ 15 │ 6505 │

│ Airport │ 16 │ 6119 │

│ Airport │ 17 │ 6341 │

│ Airport │ 18 │ 6173 │

│ Airport │ 19 │ 6329 │

│ Airport │ 20 │ 6271 │

│ Airport │ 21 │ 6649 │

│ Airport │ 22 │ 6356 │

│ Airport │ 23 │ 6016 │

│ Allerton-Pelham Gardens │ 4 │ 1 │

│ Allerton-Pelham Gardens │ 6 │ 1 │

│ Allerton-Pelham Gardens │ 7 │ 1 │

│ Allerton-Pelham Gardens │ 9 │ 5 │

│ Allerton-Pelham Gardens │ 10 │ 3 │

│ Allerton-Pelham Gardens │ 15 │ 1 │

│ Allerton-Pelham Gardens │ 20 │ 2 │

│ Allerton-Pelham Gardens │ 23 │ 1 │

│ Annadale-Huguenot-Prince's Bay-Eltingville │ 23 │ 1 │

│ Arden Heights │ 11 │ 1 │Let's look at rides to LaGuardia or JFK airports:

SELECT

pickup_datetime,

dropoff_datetime,

total_amount,

pickup_nyct2010_gid,

dropoff_nyct2010_gid,

CASE

WHEN dropoff_nyct2010_gid = 138 THEN 'LGA'

WHEN dropoff_nyct2010_gid = 132 THEN 'JFK'

END AS airport_code,

EXTRACT(YEAR FROM pickup_datetime) AS year,

EXTRACT(DAY FROM pickup_datetime) AS day,

EXTRACT(HOUR FROM pickup_datetime) AS hour

FROM trips

WHERE dropoff_nyct2010_gid IN (132, 138)

ORDER BY pickup_datetimeThe response is:

┌─────pickup_datetime─┬────dropoff_datetime─┬─total_amount─┬─pickup_nyct2010_gid─┬─dropoff_nyct2010_gid─┬─airport_code─┬─year─┬─day─┬─hour─┐

│ 2015-07-01 00:04:14 │ 2015-07-01 00:15:29 │ 13.3 │ -34 │ 132 │ JFK │ 2015 │ 1 │ 0 │

│ 2015-07-01 00:09:42 │ 2015-07-01 00:12:55 │ 6.8 │ 50 │ 138 │ LGA │ 2015 │ 1 │ 0 │

│ 2015-07-01 00:23:04 │ 2015-07-01 00:24:39 │ 4.8 │ -125 │ 132 │ JFK │ 2015 │ 1 │ 0 │

│ 2015-07-01 00:27:51 │ 2015-07-01 00:39:02 │ 14.72 │ -101 │ 138 │ LGA │ 2015 │ 1 │ 0 │

│ 2015-07-01 00:32:03 │ 2015-07-01 00:55:39 │ 39.34 │ 48 │ 138 │ LGA │ 2015 │ 1 │ 0 │

│ 2015-07-01 00:34:12 │ 2015-07-01 00:40:48 │ 9.95 │ -93 │ 132 │ JFK │ 2015 │ 1 │ 0 │

│ 2015-07-01 00:38:26 │ 2015-07-01 00:49:00 │ 13.3 │ -11 │ 138 │ LGA │ 2015 │ 1 │ 0 │

│ 2015-07-01 00:41:48 │ 2015-07-01 00:44:45 │ 6.3 │ -94 │ 132 │ JFK │ 2015 │ 1 │ 0 │

│ 2015-07-01 01:06:18 │ 2015-07-01 01:14:43 │ 11.76 │ 37 │ 132 │ JFK │ 2015 │ 1 │ 1 │

4. Create a Dictionary

If you are new to ClickHouse, it is important to understand how dictionaries work. A simple way of thinking about a dictionary is a mapping of key->value pairs that is stored in memory. The details and all the options for dictionaries are linked at the end of the tutorial.

Let's see how to create a dictionary associated with a table in your ClickHouse service. The table and therefore the dictionary, will be based on a CSV file that contains 265 rows, one row for each neighborhood in NYC. The neighborhoods are mapped to the names of the NYC boroughs (NYC has 5 boroughs: the Bronx, Brooklyn, Manhattan, Queens and Staten Island), and this file counts Newark Airport (EWR) as a borough as well.

This is part of the CSV file (shown as a table for clarity). The

LocationIDcolumn in the file maps to thepickup_nyct2010_gidanddropoff_nyct2010_gidcolumns in yourtripstable:LocationID Borough Zone service_zone 1 EWR Newark Airport EWR 2 Queens Jamaica Bay Boro Zone 3 Bronx Allerton/Pelham Gardens Boro Zone 4 Manhattan Alphabet City Yellow Zone 5 Staten Island Arden Heights Boro Zone

The URL for the file is

https://datasets-documentation.s3.eu-west-3.amazonaws.com/nyc-taxi/taxi_zone_lookup.csv. Run the following SQL, which creates a Dictionary namedtaxi_zone_dictionaryand populates the dictionary from the CSV file in S3:CREATE DICTIONARY taxi_zone_dictionary

(

`LocationID` UInt16 DEFAULT 0,

`Borough` String,

`Zone` String,

`service_zone` String

)

PRIMARY KEY LocationID

SOURCE(HTTP(URL 'https://datasets-documentation.s3.eu-west-3.amazonaws.com/nyc-taxi/taxi_zone_lookup.csv' FORMAT 'CSVWithNames'))

LIFETIME(MIN 0 MAX 0)

LAYOUT(HASHED_ARRAY())NoteSetting

LIFETIMEto 0 means this dictionary will never update with its source. It is used here to not send unnecessary traffic to our S3 bucket, but in general you could specify any lifetime values you prefer.For example:

LIFETIME(MIN 1 MAX 10)specifies the dictionary to update after some random time between 1 and 10 seconds. (The random time is necessary in order to distribute the load on the dictionary source when updating on a large number of servers.)

Verify it worked - you should get 265 rows (one row for each neighborhood):

SELECT * FROM taxi_zone_dictionaryUse the

dictGetfunction (or its variations) to retrieve a value from a dictionary. You pass in the name of the dictionary, the value you want, and the key (which in our example is theLocationIDcolumn oftaxi_zone_dictionary).For example, the following query returns the

BoroughwhoseLocationIDis 132 (which as we saw above is JFK airport):SELECT dictGet('taxi_zone_dictionary', 'Borough', 132)JFK is in Queens, and notice the time to retrieve the value is essentially 0:

┌─dictGet('taxi_zone_dictionary', 'Borough', 132)─┐

│ Queens │

└─────────────────────────────────────────────────┘

1 rows in set. Elapsed: 0.004 sec.Use the

dictHasfunction to see if a key is present in the dictionary. For example, the following query returns 1 (which is "true" in ClickHouse):SELECT dictHas('taxi_zone_dictionary', 132)The following query returns 0 because 4567 is not a value of

LocationIDin the dictionary:SELECT dictHas('taxi_zone_dictionary', 4567)Use the

dictGetfunction to retrieve a borough's name in a query. For example:SELECT

count(1) AS total,

dictGetOrDefault('taxi_zone_dictionary','Borough', toUInt64(pickup_nyct2010_gid), 'Unknown') AS borough_name

FROM trips

WHERE dropoff_nyct2010_gid = 132 OR dropoff_nyct2010_gid = 138

GROUP BY borough_name

ORDER BY total DESCThis query sums up the number of taxi rides per borough that end at either the LaGuardia or JFK airport. The result looks like the following, and notice there are quite a few trips where the pickup neighborhood is unknown:

┌─total─┬─borough_name──┐

│ 23683 │ Unknown │

│ 7053 │ Manhattan │

│ 6828 │ Brooklyn │

│ 4458 │ Queens │

│ 2670 │ Bronx │

│ 554 │ Staten Island │

│ 53 │ EWR │

└───────┴───────────────┘

7 rows in set. Elapsed: 0.019 sec. Processed 2.00 million rows, 4.00 MB (105.70 million rows/s., 211.40 MB/s.)

5. Perform a Join

Let's write some queries that join the taxi_zone_dictionary with your trips table.

We can start with a simple JOIN that acts similarly to the previous airport query above:

SELECT

count(1) AS total,

Borough

FROM trips

JOIN taxi_zone_dictionary ON toUInt64(trips.pickup_nyct2010_gid) = taxi_zone_dictionary.LocationID

WHERE dropoff_nyct2010_gid = 132 OR dropoff_nyct2010_gid = 138

GROUP BY Borough

ORDER BY total DESCThe response looks familiar:

┌─total─┬─Borough───────┐

│ 7053 │ Manhattan │

│ 6828 │ Brooklyn │

│ 4458 │ Queens │

│ 2670 │ Bronx │

│ 554 │ Staten Island │

│ 53 │ EWR │

└───────┴───────────────┘

6 rows in set. Elapsed: 0.034 sec. Processed 2.00 million rows, 4.00 MB (59.14 million rows/s., 118.29 MB/s.)NoteNotice the output of the above

JOINquery is the same as the query before it that useddictGetOrDefault(except that theUnknownvalues are not included). Behind the scenes, ClickHouse is actually calling thedictGetfunction for thetaxi_zone_dictionarydictionary, but theJOINsyntax is more familiar for SQL developers.We do not use

SELECT *often in ClickHouse - you should only retrieve the columns you actually need! But it is difficult to find a query that takes a long time, so this query purposely selects every column and returns every row (except there is a built-in 10,000 row maximum in the response by default), and also does a right join of every row with the dictionary:SELECT *

FROM trips

JOIN taxi_zone_dictionary

ON trips.dropoff_nyct2010_gid = taxi_zone_dictionary.LocationID

WHERE tip_amount > 0

ORDER BY tip_amount DESC

LIMIT 1000

Congrats!

Well done - you made it through the tutorial, and hopefully you have a better understanding of how to use ClickHouse. Here are some options for what to do next:

- Read how primary keys work in ClickHouse - this knowledge will move you a long ways forward along your journey to becoming a ClickHouse expert

- Integrate an external data source like files, Kafka, PostgreSQL, data pipelines, or lots of other data sources

- Connect your favorite UI/BI tool to ClickHouse

- Check out the SQL Reference and browse through the various functions. ClickHouse has an amazing collection of functions for transforming, processing and analyzing data

- Learn more about Dictionaries